State of AI for Coding: Power, Speed, and Security Risk (January 2026)

Written by Rafter Team

February 1, 2026

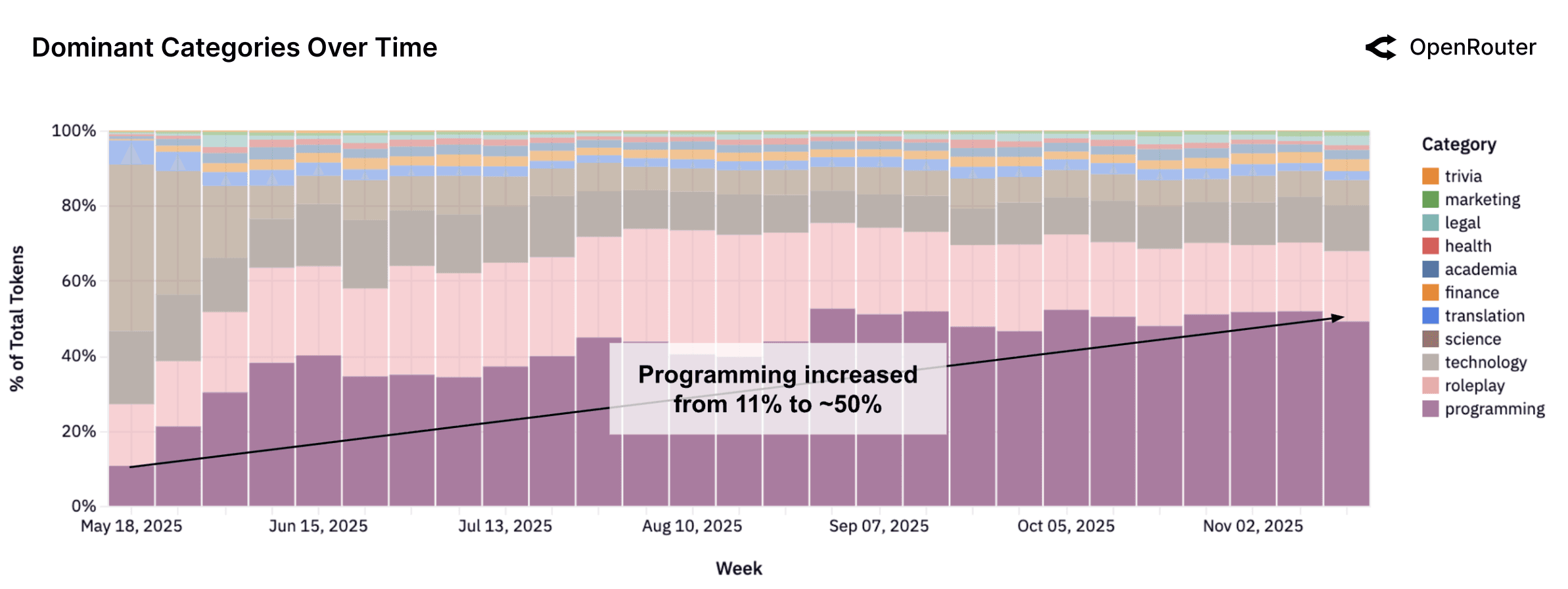

AI-assisted coding has become a dominant workload in software development, with coding representing one of the fastest-growing uses of AI according to OpenRouter's January 2026 State of AI report. The report, which aggregates real production usage across multiple model providers, reveals that developers are not just using AI for autocomplete suggestions—they're deploying autonomous agents that write, refactor, review, and deploy code with minimal human oversight. This shift from AI as a productivity tool to AI as critical infrastructure introduces security risks that most teams haven't yet addressed.

AI has quietly crossed a threshold in software development. It's no longer a novelty or a productivity hack—it's part of the critical path to production. Code is being written, refactored, reviewed, and deployed with AI in the loop at every step. OpenRouter's State of AI report makes this impossible to ignore, offering a rare system-level view into how models are actually used in production across providers and workloads.

What's less obvious, but far more important, is what this means for security. Speed has increased dramatically. Oversight has not. Most teams are still treating AI-generated code as helpful autocomplete rather than what it really is: untrusted, executable input that bypasses traditional code review processes and security controls.

Why the State of AI Report Matters for Developers

OpenRouter's report isn't a benchmark leaderboard or a marketing deck. It's an aggregate view across real usage: different models, different providers, different workloads, all routed through a single abstraction layer.

That matters for developers because it shows what people are actually doing with AI, not what they claim to be doing.

A few things the report is especially good at:

- Showing which workloads dominate token usage

- Highlighting how fast model preferences change

- Revealing the rise of long-context, tool-using, agentic systems

What it doesn't do explicitly is talk about security. But if you read it through a security lens—especially a coding security lens—the implications are hard to miss.

In this post, I'm focusing on what the report tells us about:

- AI-assisted coding as a primary workload

- The shift from suggestions to autonomous agents

- Where new security risks are emerging

- What teams should do now, before incidents force the issue

AI Coding Is the Dominant Use Case—and Still Accelerating

AI coding security starts with scale

One of the clearest signals in the State of AI report is how much AI usage is tied to coding-related tasks. Code generation, refactoring, debugging, test writing, configuration, and infrastructure definition all show up as high-volume workloads.

Figure 1: AI coding workloads show significant growth and dominance across OpenRouter's usage data (Source: OpenRouter State of AI Report)

This matters because code is not just text. It's instructions that get executed.

Compared to other AI outputs:

- A bad summary is annoying

- A bad image is cosmetic

- A bad line of code can ship a vulnerability to production

The report also shows a preference for:

- Long-context models (entire repos, not snippets)

- Multi-step interactions

- Tool use and orchestration

All of this points to a world where AI isn't just helping developers write code—it's actively shaping system behavior.

Takeaway: AI-generated code now sits directly on the path to production, which makes AI coding security a first-order concern, not an afterthought.

From Autocomplete to Agentic Coding Systems

The quiet shift most teams underestimate

Early AI coding tools behaved like smart autocomplete. You typed, the model suggested, you accepted or rejected. Human judgment was the bottleneck.

That's no longer the dominant pattern.

The report shows increasing use of:

- Autonomous or semi-autonomous agents

- Tool invocation (file I/O, package installs, test execution)

- Multi-file, multi-commit changes

- Longer uninterrupted runs

In practice, this looks like:

- "Fix all lint errors across the repo"

- "Migrate this service to a new framework"

- "Set up auth, database, and deployment"

These are not small suggestions. They're architectural changes.

Why this matters for security

Agentic systems change the risk profile dramatically:

- Fewer human checkpoints

- Larger blast radius per task

- Harder attribution when something goes wrong

- Increased likelihood of subtle, systemic vulnerabilities

Once an agent is trusted to act, the question becomes not "is this code correct?" but "what authority did we just grant this system?"
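One way to make that granted authority explicit is a deny-by-default tool policy. The following is a minimal sketch, assuming a hypothetical agent runtime that calls `is_allowed` before every tool invocation. The `AgentPolicy` type and the tool names (`read_file`, `write_file`, `run_tests`) are illustrative assumptions, not part of any specific framework or of the report.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath
from typing import Optional


@dataclass(frozen=True)
class AgentPolicy:
    """Hypothetical deny-by-default policy scoped to a single agent task."""

    allowed_tools: frozenset = frozenset()
    writable_roots: tuple = ()  # repo-relative directories the agent may modify

    def is_allowed(self, tool: str, path: Optional[str] = None) -> bool:
        # Anything not explicitly listed is denied.
        if tool not in self.allowed_tools:
            return False
        if tool == "write_file":
            if path is None:
                return False
            target = PurePosixPath(path)
            # Reject absolute paths and parent-directory traversal outright.
            if target.is_absolute() or ".." in target.parts:
                return False
            return any(target.is_relative_to(root) for root in self.writable_roots)
        return True


# Example policy for a "fix lint errors" task: no installs, no deploys.
lint_fix_policy = AgentPolicy(
    allowed_tools=frozenset({"read_file", "write_file", "run_tests"}),
    writable_roots=("src", "tests"),
)
```

With this policy, `lint_fix_policy.is_allowed("write_file", "src/app.py")` is permitted, while a package install or a write under `deploy/` is refused. The point of the design is that widening the agent's authority requires an explicit, reviewable change to the policy.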

Takeaway: As coding becomes more agentic, AI coding security becomes a systems problem, not a code review problem.

Security Is Mostly Implicit—That's the Core Problem

What the report doesn't explicitly say

OpenRouter's report is descriptive, not prescriptive. It shows what is happening, not what should happen. But one thing is notably absent: any notion of security guarantees around generated code.

There's no concept of:

- Secure-by-default outputs

- Provenance or traceability

- Policy-aware generation

- Risk scoring

That absence matters because most developers implicitly trust AI outputs—especially when they "look right" and pass tests.

Why this is dangerous

Large language models are trained on:

- Public repositories

- Legacy codebases

- Vulnerable patterns that "worked" historically

They optimize for:

- Plausibility

- Speed

- Task completion

They do not optimize for:

- Least privilege

- Threat modeling

- Defensive defaults

Real-world failure modes we see repeatedly include:

- Insecure authentication flows

- Hardcoded secrets or tokens

- Unsafe deserialization

- Misuse of modern frameworks' server-side features

AI doesn't invent these problems—but it scales them.
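As an illustration of catching one of these failure modes mechanically, here is a minimal sketch that flags likely hardcoded secrets in the added lines of a unified diff. The two regular expressions are simplified assumptions chosen for the example; production scanners rely on much larger rule sets and additional signals.

```python
import re

# Simplified, illustrative patterns only; not a complete rule set.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "assigned_secret": re.compile(
        r"(?i)(?:api[_-]?key|secret|token|password)\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def find_secrets_in_diff(diff_text: str) -> list[tuple[int, str]]:
    """Return (line number within the diff, rule name) for each suspicious added line."""
    findings = []
    for number, line in enumerate(diff_text.splitlines(), start=1):
        # Only inspect added lines; skip the "+++ b/file" header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((number, rule))
    return findings
```

A check like this is cheap enough to run on every change, which matters more as the volume of generated code grows.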

Takeaway: AI coding security fails quietly, because the output usually "works" until it's exploited.

The Emerging Risk Stack for AI-Generated Code

To reason clearly about AI coding security, it helps to think in layers.

- Model-level risk

  - Training data includes vulnerable code

  - No guarantee of up-to-date best practices

  - Security advice varies wildly by model

- Prompt-level risk

  - Ambiguous or underspecified instructions

  - Over-optimization for speed

  - No explicit security constraints

- Agent-level risk

  - Autonomous execution

  - Tool access

  - Broad permissions

- System-level risk

  - CI/CD pipelines trust generated code

  - Reviews don't scale with velocity

  - Little to no audit trail

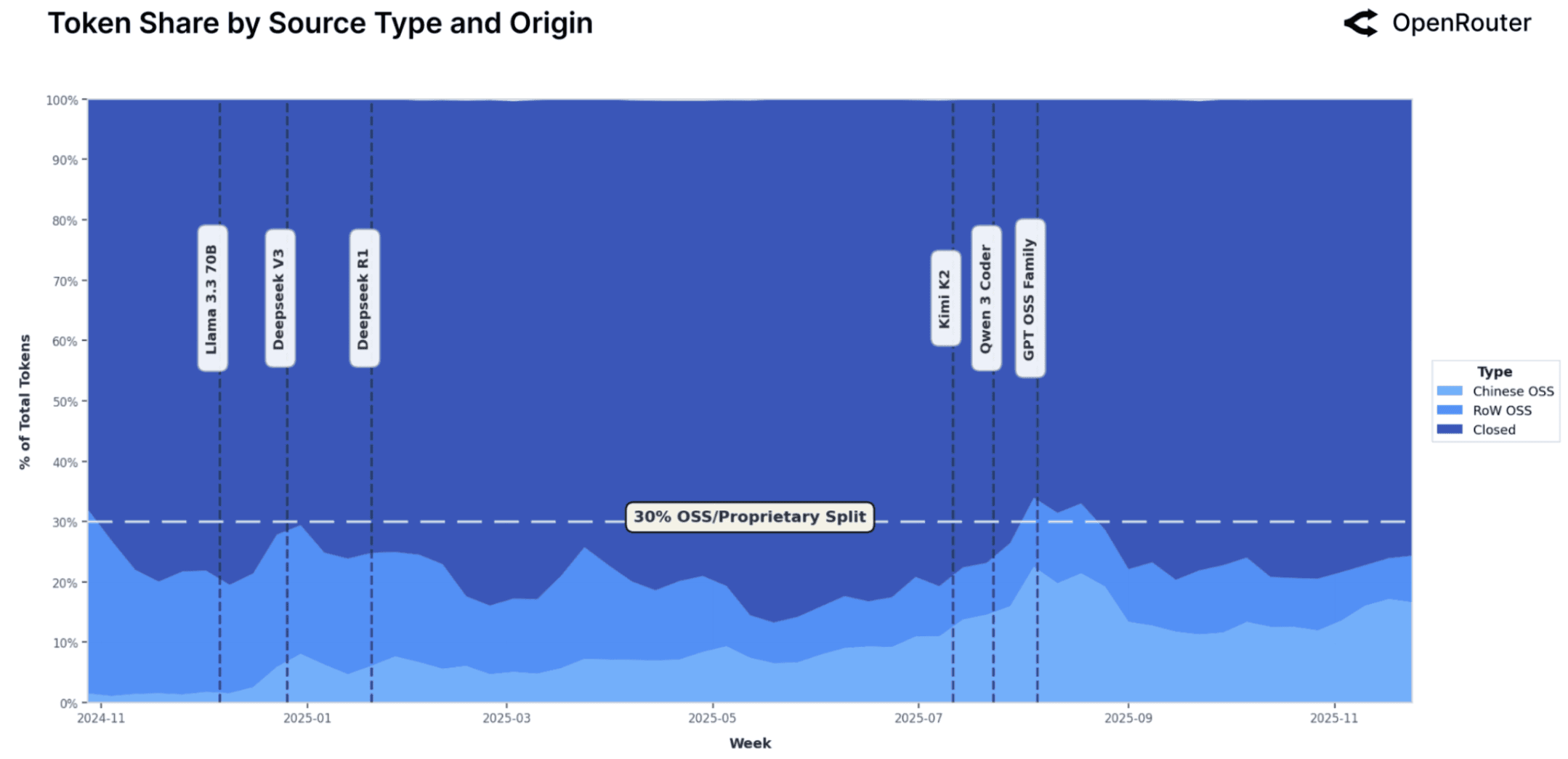

Figure 2: Rapid model switching and orchestration patterns create security consistency challenges (Source: OpenRouter State of AI Report)

The State of AI report shows rapid model switching and orchestration. From a security perspective, that means:

- Inconsistent behavior

- Harder reproducibility

- Fewer stable assumptions

Takeaway: Traditional AppSec tools weren't designed for a world where code is generated dynamically at scale.

What Developers and Teams Should Do Now

Practical steps that actually work

You don't fix this by slowing developers down. You fix it by automating security checks at the same layer where the coding automation runs.

- Treat AI output as untrusted input

  This is the mental shift everything else depends on.

- Automatically scan AI-generated code

  Static analysis, dependency scanning, and policy checks should run by default, especially on AI-authored changes.

      # Example: flag AI-generated diffs for extra scanning
      git diff origin/main...HEAD | security-scan --ai-mode -

- Log provenance

  At minimum, record:

  - Model used

  - Prompt or task description

  - Agent vs human origin

- Constrain agent permissions

  Agents don't need production credentials, global write access, or deploy rights by default.

- Train developers on AI-specific risks

  Most developers understand SQL injection and XSS. Far fewer understand how AI changes the threat model.
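The fields named in the "Log provenance" step can be captured in a small structured record. This is a minimal sketch, assuming a hypothetical `ProvenanceRecord` type and a JSON Lines log; the field names and the hashing of the task text are illustrative choices rather than an established format.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ProvenanceRecord:
    """Hypothetical provenance entry for one change."""

    commit: str  # commit or change identifier
    origin: str  # "agent" or "human"
    model: str   # model identifier as reported by the provider
    task: str    # prompt or task description

    def __post_init__(self) -> None:
        if self.origin not in {"agent", "human"}:
            raise ValueError(f"unknown origin: {self.origin!r}")

    def to_json_line(self) -> str:
        record = asdict(self)
        # A hash of the task text lets entries be correlated even if the raw
        # prompt is later redacted from the log.
        record["task_sha256"] = hashlib.sha256(self.task.encode("utf-8")).hexdigest()
        record["recorded_at"] = datetime.now(timezone.utc).isoformat()
        return json.dumps(record, sort_keys=True)
```

Appending one such line per change to an append-only log is usually enough to answer "which model produced this, and for what task?" after an incident.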

Takeaway: You don't choose between speed and security—you choose whether security is manual or automated.

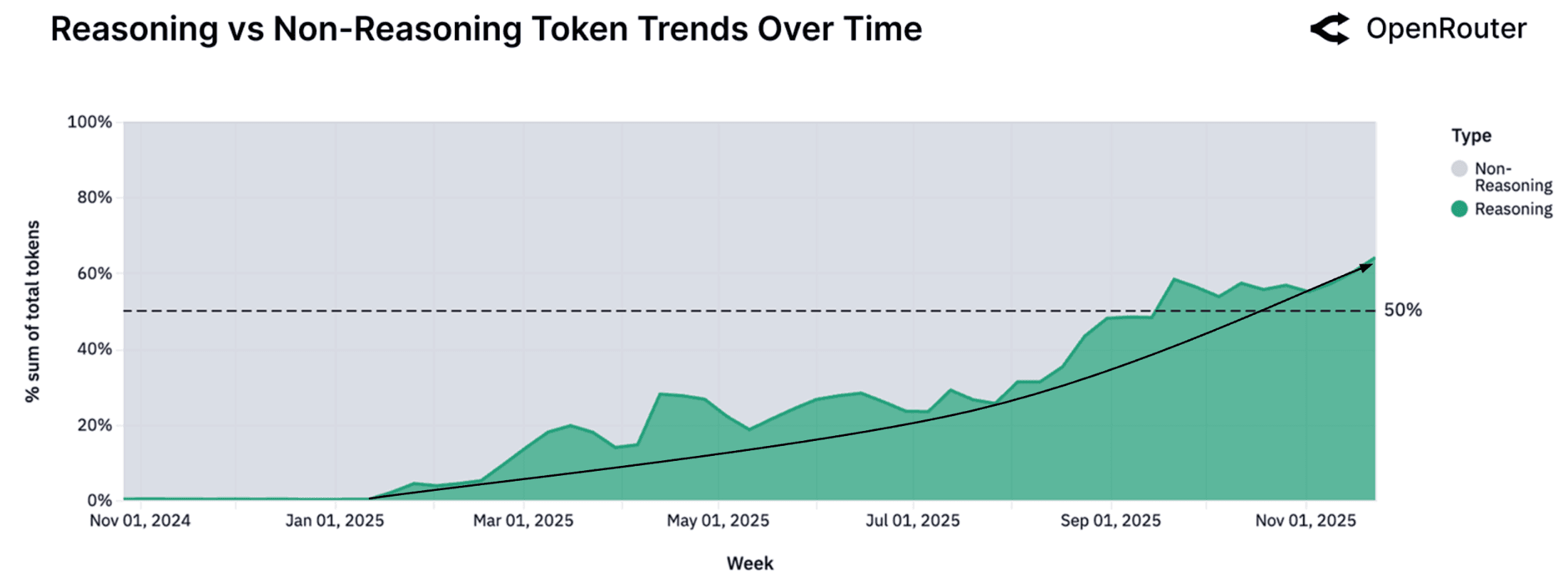

What the State of AI Implies About the Future of Coding

The most important insight from OpenRouter's report isn't about which model is winning this quarter. It's about trajectory.

Figure 3: Projected growth in autonomous coding systems and agentic workflows (Source: OpenRouter State of AI Report)

We are moving toward:

- More autonomous coding systems

- Larger scopes of responsibility

- Faster iteration loops

In that world, security becomes:

- A differentiator between tools

- A liability for teams that ignore it

- A design constraint, not a checklist

At Rafter, we think the next generation of coding tools won't compete on raw intelligence alone. They'll compete on how safely they let you move fast.

Takeaway: The future of AI coding isn't just smarter models—it's safer systems.

Conclusion: The State of AI Is a Security Question

OpenRouter's State of AI report makes one thing clear: AI-assisted coding is no longer optional or experimental. It's already foundational. But the report also shows how quickly responsibility is shifting from humans to systems—often without corresponding guardrails.

Your security adaptation plan:

- Treat AI-generated code as untrusted input: Implement the same validation and security scanning you'd apply to any external dependency or third-party code

- Scan every AI-generated commit: Integrate automated security scanning (like Rafter) into CI/CD to catch hardcoded secrets, injection vulnerabilities, and insecure patterns before merge

- Audit AI agent permissions: Review what your AI coding agents can access, modify, and deploy; apply least-privilege principles to limit blast radius

- Establish review gates for autonomous changes: Require human review for AI-generated code that touches authentication, authorization, payment processing, or data access layers

- Monitor AI-generated code quality: Track error rates, security issues, and production incidents for AI-written code separately to understand actual risk vs. perceived productivity gains

- Update security training: Ensure your team understands AI-specific attack vectors like prompt injection, model poisoning, and data exfiltration through AI outputs
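The review-gate item in this plan can be expressed as a small policy function that CI calls with the list of changed files. This is a minimal sketch; the glob patterns are hypothetical placeholders for the sensitive areas named above and would need to match a real repository layout.

```python
from fnmatch import fnmatchcase

# Hypothetical globs for authentication, authorization, payment, and data access code.
SENSITIVE_GLOBS = (
    "*/auth/*",
    "*/authz/*",
    "*/payments/*",
    "*/models/*",
)


def requires_human_review(changed_paths: list[str], ai_generated: bool) -> bool:
    """Return True when an AI-generated change touches a sensitive path."""
    if not ai_generated:
        # Human-authored changes follow the normal review process.
        return False
    # A leading "/" lets the same globs match top-level and nested directories.
    return any(
        fnmatchcase(f"/{path}", pattern)
        for path in changed_paths
        for pattern in SENSITIVE_GLOBS
    )
```

The `ai_generated` flag is assumed to come from whatever provenance signal the team records, for example a commit trailer or an agent-specific author identity.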

AI is writing a significant share of production code. Agentic workflows magnify both speed and risk. Security practices have not yet caught up. Teams that adapt early will avoid painful lessons later. If you treat AI as a power tool, you'll get power—and accidents. If you treat it as infrastructure, you'll design for safety.


Need Help Securing Your App?

AI-generated code introduces new security risks that traditional tools miss. Rafter helps you catch security issues before they become emergencies.